VMware Test Disk Alignment

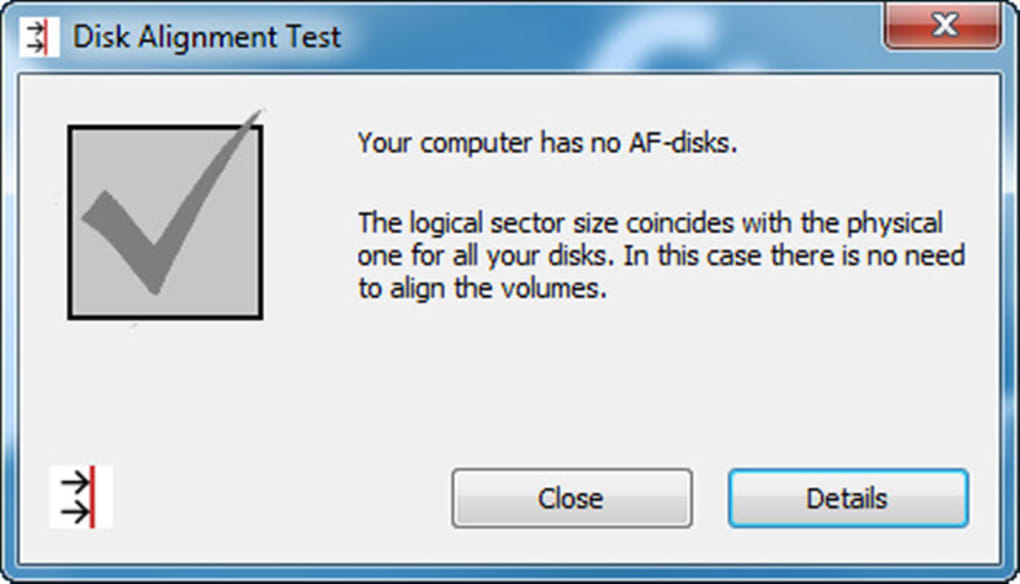

Jun 11, 2015. Quick ways to check disk alignment with ESXi and Windows VMs. There are two simple checks a virtual infrastructure (VI) admin should be doing to ensure ESXi datastores and Windows VMs are properly aligned.

How to align a VMware virtual disk: 2) Edit the “-flat” virtual disk file of the VM using fdisk (# fdisk ./WinXP32temp01-flat.vmdk). Enter expert mode by typing “x”, then type “c” and enter a number of cylinders equal to the value of “ddb.geometry.cylinders”, which was “1044” in this example.

The tool is free and it's simply called VM Check Alignment. Place the executable in the VM you want to test and click the big Check alignment button. In the pane below you'll see a report saying whether or not the VM is aligned. As simple as that.
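The arithmetic behind an alignment check is a single modulo test: a partition is aligned when its starting byte offset is a whole multiple of the boundary you care about. A minimal sketch, assuming 512-byte logical sectors and a 4 KiB boundary (1 MiB is another common target); the function name is hypothetical and this is not the VM Check Alignment tool's own code.

```python
def is_aligned(start_sector: int, sector_size: int = 512, boundary: int = 4096) -> bool:
    """Return True if a partition starting at start_sector begins on a `boundary`-byte edge."""
    offset = start_sector * sector_size  # byte offset of the partition start
    return offset % boundary == 0


# Legacy MBR layouts (e.g. Windows XP/2003) start the first partition at sector 63.
for sector in (63, 64, 2048):
    print(sector, sector * 512, is_aligned(sector))
# 63 32256 False
# 64 32768 True
# 2048 1048576 True
```

Sector 2048 (a 1 MiB offset) is the default starting point on Windows Vista/Server 2008 and later, which is why those guests are usually aligned out of the box.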

I have a server with ESXi 5 and iSCSI-attached network storage. The storage server has 4x 1 TB SATA II disks in RAID-Z on FreeNAS 8.0.4. The two machines are connected to each other with Gigabit Ethernet, isolated from everything else, with no switch in between. The SAN box itself is a 1U Supermicro server with an Intel Pentium D at 3 GHz and 2 GB of memory. The disks are connected to an integrated controller (Intel something?).

The RAID-Z volume is divided into three parts: two zvols shared over iSCSI, and one dataset directly on top of ZFS, shared over NFS and similar protocols.

I ssh'd into the FreeNAS box and did some testing on the disks. I used dd to test the third part of the array (directly on top of ZFS). I copied 4 GB (twice the amount of RAM) from /dev/zero to the disk, and the speed was 80 MB/s.

One of the iSCSI-shared zvols is a datastore for the ESXi host. I ran a similar 'time dd ...' test there. Since dd there did not report the speed, I divided the amount of data transferred by the elapsed time reported by time. The result was around 30-40 MB/s. That's about half of the speed on the FreeNAS host!
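The manual calculation described above (bytes transferred divided by the elapsed time from time) is easy to get slightly wrong by mixing decimal megabytes with binary mebibytes. A minimal helper; the 120-second run in the example is hypothetical, not a measurement from this setup.

```python
def throughput(bytes_written: int, elapsed_s: float) -> tuple[float, float]:
    """Return (MB/s, MiB/s) for bytes_written transferred in elapsed_s seconds."""
    if elapsed_s <= 0:
        raise ValueError("elapsed time must be positive")
    rate = bytes_written / elapsed_s          # bytes per second
    return rate / 1_000_000, rate / (1024 * 1024)


# 4 GiB written (e.g. 4096 blocks of 1 MiB) in a hypothetical 120 s reported by `time`.
mb_s, mib_s = throughput(4 * 1024**3, 120.0)
print(f"{mb_s:.1f} MB/s ({mib_s:.1f} MiB/s)")  # prints "35.8 MB/s (34.1 MiB/s)"
```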

Then I tested the I/O on a VM running on the same ESXi host. The VM was a light CentOS 6.0 machine that was not really doing anything else at the time. There were no other VMs running on the server, and the other two 'parts' of the disk array were not in use. A similar dd test gave me a result of about 15-20 MB/s. That is again about half of the result at the level below!
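Expressing each measurement as a fraction of the layer beneath it makes the "about half each time" pattern explicit. This sketch uses the midpoints of the reported ranges (80, 35 and 17.5 MB/s); the midpoints are an assumption for illustration, not separate measurements.

```python
def relative_rates(rates: list[float]) -> list[tuple[float, float]]:
    """For each rate, return (fraction of the previous layer, fraction of the first layer)."""
    out = []
    prev = rates[0]
    for r in rates:
        out.append((r / prev, r / rates[0]))
        prev = r
    return out


layers = ["ZFS on FreeNAS", "zvol via ESXi", "dd in CentOS guest"]
rates = [80.0, 35.0, 17.5]  # midpoints of the reported MB/s figures
for name, (vs_prev, vs_native) in zip(layers, relative_rates(rates)):
    print(f"{name:20s} {vs_prev:4.0%} of previous layer, {vs_native:4.0%} of native")
```

The guest ends up with roughly a fifth of what the pool delivers locally, which is the gap the answers below try to explain.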

Of course there is some overhead in RAID-Z -> ZFS -> zvol -> iSCSI -> VMFS -> VM, but I don't expect it to be that big. I believe something must be wrong in my system.

I have heard about bad performance of FreeNAS's iSCSI; is that it? I have not managed to get any other 'big' SAN OS (NexentaStor, Openfiler) to run on the box.

Can you see any obvious problems with my setup?

5 Answers

To speed this up you're going to need more RAM. I'd start with these incremental improvements.

Firstly, speed up the filesystem:

1) ZFS needs much more RAM than you have to make good use of the ARC cache. The more the better. If you can increase it to at least 8 GB, you should see quite an improvement. Ours have 64 GB in them.

2) Next, I would add a ZIL log device, i.e. a small SSD of around 20 GB. Use an SLC type rather than MLC. The recommendation is to use two mirrored ZIL devices for redundancy. This will speed up synchronous writes tremendously.

3) Add an L2ARC device. This can be a good-sized SSD; e.g. a 250 GB MLC drive would be suitable. Technically speaking, an L2ARC is not needed. However, it's usually cheaper to add a large amount of fast SSD storage than more primary RAM. But start with as much RAM as you can fit/afford first.
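For reference, the standard zpool syntax for attaching these devices is "zpool add POOL log mirror DEV DEV" and "zpool add POOL cache DEV". The sketch below only builds the argument lists and runs nothing; the pool name "tank" and device names "ada1" to "ada3" are hypothetical placeholders, and on FreeNAS the GUI volume manager is the usual way to do this.

```python
def slog_and_l2arc_commands(pool: str, log_devs: list[str], cache_devs: list[str]) -> list[list[str]]:
    """Build zpool commands that add a (mirrored) log device and L2ARC cache devices."""
    cmds = []
    if log_devs:
        # Two or more log devices are mirrored for redundancy, as recommended above.
        vdev = ["mirror", *log_devs] if len(log_devs) > 1 else list(log_devs)
        cmds.append(["zpool", "add", pool, "log", *vdev])
    if cache_devs:
        # Cache (L2ARC) devices cannot be mirrored; each is added as a plain device.
        cmds.append(["zpool", "add", pool, "cache", *cache_devs])
    return cmds


for cmd in slog_and_l2arc_commands("tank", ["ada1", "ada2"], ["ada3"]):
    print(" ".join(cmd))
# zpool add tank log mirror ada1 ada2
# zpool add tank cache ada3
```

Review the device names carefully before running anything like this against a live pool.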

A number of websites offer advice on ZFS tuning in general, and many of these parameters/variables can be set through the GUI. They are worth looking into and trying.

Also, consult the freenas forums. You may receive better support there than you will here.

Secondly, you can speed up the network. If you happen to have multiple NICs in your Supermicro server, you can channel-bond them to give you almost double the network throughput and some redundancy: http://kb.vmware.com/selfservice/microsites/search.do?language=en_US&cmd=displayKC&externalId=1004088

Matt

Some suggestions.

- RAID 1+0 or ZFS mirrors typically perform better than RAIDZ.

- You don't mention the actual specifications of your storage server, but what is your CPU type/speed, RAM amount and storage controller?

- Is there a network switch involved? Is the storage on its own network, isolated from VM traffic?

I'd argue that 80 Megabytes/second is slow for a direct test on the FreeNAS system. You may have a disk problem. Are you using 'Advanced Format' or 4K-sector disks? If so, there could be partition alignment issues that will affect your performance.
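Why misalignment hurts on 4K-sector disks: a 4 KiB write that does not start on a 4 KiB boundary straddles two physical sectors, so the drive has to read, modify and rewrite both. A minimal sketch of that arithmetic, assuming 4096-byte physical sectors; the function names are hypothetical.

```python
def physical_sectors_touched(offset: int, length: int, phys: int = 4096) -> int:
    """Number of physical sectors a write of `length` bytes at byte `offset` touches."""
    if length <= 0:
        return 0
    first = offset // phys
    last = (offset + length - 1) // phys
    return last - first + 1


def needs_read_modify_write(offset: int, length: int, phys: int = 4096) -> bool:
    """True if the write only partially covers at least one physical sector."""
    return offset % phys != 0 or (offset + length) % phys != 0


# A 4 KiB write at the start of a partition beginning at sector 63 (byte 32256)
# versus one beginning at sector 64 (byte 32768).
print(physical_sectors_touched(32256, 4096), needs_read_modify_write(32256, 4096))  # 2 True
print(physical_sectors_touched(32768, 4096), needs_read_modify_write(32768, 4096))  # 1 False
```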

What you are probably seeing is not a translation overhead but a performance hit due to a different access pattern. Sequential writes to a ZFS volume would simply create a nearly-sequential data stream to be written to your underlying physical disks. Sequential writes to a VMFS datastore on top of a ZFS volume would create a data stream which is 'pierced' by metadata updates of the VMFS filesystem structure and frequent sync / cache flush requests for this very metadata. Sequential writes to a virtual disk from within a client again would add more 'piercing' of your sequential stream due to the guest's file system metadata.

The cure usually prescribed in these situations is to enable a write cache that ignores cache flush requests. It would alleviate the random-write and sync issues and improve the performance you see in your VM guests. Keep in mind, however, that your data integrity would be at risk if the cache cannot persist across power outages or sudden reboots.

You could easily test whether you are hitting your disks' limits by running something like iostat -xd 5 on your FreeNAS box and looking at the queue sizes and utilization statistics of your underlying physical devices. Running esxtop in disk device mode should also help you get a clue about what is going on by showing disk utilization statistics from the ESX side.
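To scan a long capture of that iostat output for saturated disks, a rough sketch like the one below can help. It is not a FreeNAS tool: column layouts differ between FreeBSD releases and Linux sysstat, so it locates the utilization column by header name ("%b" on FreeBSD, "%util" on Linux) rather than by position, which is an assumption about the output format you capture.

```python
def busy_devices(iostat_text: str, threshold: float = 90.0) -> dict[str, float]:
    """Return {device: utilization %} for devices at or above `threshold`.

    The first iostat report is an average since boot, so the most recent
    sample for each device decides whether it is reported.
    """
    util_col = None
    busy: dict[str, float] = {}
    for line in iostat_text.splitlines():
        fields = line.split()
        if not fields:
            continue
        if fields[0].rstrip(":").lower() == "device":
            # Header line: find the utilization column by name.
            names = [f.lower() for f in fields]
            util_col = next((i for i, n in enumerate(names) if n in ("%b", "%util")), None)
            continue
        if util_col is None or len(fields) <= util_col:
            continue  # banner lines or rows before a recognized header
        try:
            util = float(fields[util_col])
        except ValueError:
            continue
        if util >= threshold:
            busy[fields[0]] = util
        else:
            busy.pop(fields[0], None)
    return busy
```

Feed it text saved from a few intervals of iostat -xd 5; a disk that sits near 100% busy while throughput stays low points at the disks rather than at iSCSI.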


the-wabbit

I currently use FreeNAS 8 with two RAID 5 SATA arrays attached to the server. The server has 8 GB of RAM and two single-core Intel Xeon processors.

My performance has been substantially different to what others have experienced.

I am not using MPIO or any load balancing on the NICs, just a single Intel GigE (10/100/1000) server NIC.

Each array has five 2.0 TB drives in RAID 5, equating to roughly 7.5 TB of space.
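The usable figure follows from RAID 5 spending one drive's worth of space on parity, plus the gap between decimal terabytes on the drive label and binary tebibytes shown by most tools. A quick check that ignores filesystem overhead; the helper name is hypothetical.

```python
def raid5_usable(drives: int, drive_tb: float) -> tuple[float, float]:
    """Usable RAID 5 capacity as (decimal TB, binary TiB), ignoring filesystem overhead."""
    if drives < 3:
        raise ValueError("RAID 5 needs at least three drives")
    usable_bytes = (drives - 1) * drive_tb * 1e12  # one drive's worth of space holds parity
    return usable_bytes / 1e12, usable_bytes / 2**40


tb, tib = raid5_usable(5, 2.0)
print(f"{tb:.1f} TB = {tib:.2f} TiB")  # prints "8.0 TB = 7.28 TiB"
```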

I utilize these two arrays for two different functions:

1) Array #1 is attached to an Intel HPC server running CentOS 5.8 and PostgreSQL. The file system is ext4. I have been able to get a peak of 800 Mbps to this array.

2) Array #2 is used for Citrix XenServer 6 CentOS VMs. These 200 GB drive partitions are providing outstanding performance. Each of the VMs runs a real-time SIP signaling server supporting 5-10K concurrent calls at 500-1000 CPS (calls per second). The local database writes the CDRs (call detail records) to these partitions before the main database server copies them into its tables. I have also been able to get a peak of 800 Mbps to this array.


Now, I would not suggest using a FreeNAS iSCSI array as the mainstay solution for large database partitions. I have that running on a 10K RPM SAS RAID 10 partition on the database server.

But there is absolutely no reason that you cannot send your data traffic across a simple switched Ethernet network to a reasonably configured server running FreeNAS at the theoretical peak of GigE.
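For scale, network figures are quoted in megabits and disk figures in megabytes. A tiny conversion, assuming 8 bits per byte and ignoring Ethernet/TCP/iSCSI framing overhead, which pulls the practical GigE ceiling somewhat below the 125 MB/s line rate:

```python
GIGE_MBIT_S = 1000  # nominal Gigabit Ethernet line rate


def mbit_to_mbyte(mbit_s: float) -> float:
    """Convert megabits per second to megabytes per second (8 bits per byte)."""
    return mbit_s / 8


peak = 800  # Mbps, the peak quoted above for both arrays
print(mbit_to_mbyte(peak), "MB/s")                   # 100.0 MB/s
print(mbit_to_mbyte(GIGE_MBIT_S), "MB/s line rate")  # 125.0 MB/s line rate
print(f"{peak / GIGE_MBIT_S:.0%} of GigE")            # 80% of GigE
```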

I have yet to test the read throughput, but RAID 5 is slower on writes, so reads should be as good or better.
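The usual rule of thumb is that RAID 5 pays a penalty on small random writes: each one costs four disk I/Os (read old data, read old parity, write new data, write new parity), while a read costs one. A back-of-the-envelope estimate, assuming a hypothetical 75 IOPS per 7200 RPM SATA drive:

```python
RAID5_WRITE_PENALTY = 4  # read data, read parity, write data, write parity


def raid5_iops(drives: int, per_drive_iops: float, read_fraction: float) -> float:
    """Estimate random front-end IOPS of a RAID 5 array for a given read/write mix."""
    if not 0.0 <= read_fraction <= 1.0:
        raise ValueError("read_fraction must be between 0 and 1")
    raw = drives * per_drive_iops
    write_fraction = 1.0 - read_fraction
    # Each front-end write costs RAID5_WRITE_PENALTY back-end I/Os; each read costs one.
    return raw / (read_fraction + write_fraction * RAID5_WRITE_PENALTY)


# Five drives at an assumed 75 IOPS each.
print(raid5_iops(5, 75, 1.0))  # 375.0 (all reads)
print(raid5_iops(5, 75, 0.0))  # 93.75 (all writes)
```

Large sequential writes that fill whole stripes avoid most of this penalty, which is why streaming workloads fare better than this estimate suggests.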

FreeNAS consistently scales well as more traffic demands are made of it. Any CentOS 5.8 server is going to use its own cache to buffer the data before sending it to the iSCSI arrays, so make sure you have ample memory on your VM hosts and you will be happy with your performance.

Nothing tests a technology better than database applications and real-time traffic applications in my opinion.

I too think that adding a system memory write-through cache feature would be beneficial, but my performance numbers show that FreeNAS and iSCSI are delivering stellar performance!

It can only get better.

First, VMware performance is not really an issue of the protocol, whether iSCSI (on FreeNAS), NFSv3 or CIFS (Windows); it's an issue of ZFS filesystem writes and the 'sync' setting.

ZFS datasets and zvols in FreeNAS have a property called 'sync' that can be set to standard, always or disabled. The default, 'sync=standard', honors every synchronous write request from the client, and 'sync=always' forces every write to be flushed to stable storage before it is acknowledged. This dramatically slows performance but guarantees that acknowledged writes are on disk. For example, running VMware ESXi 5.5 and FreeNAS on modern equipment (3.x GHz CPU, 7200 RPM Seagate drive, 1 GigE network) with no other load typically results in 4-5 MB/s on a VMware clone, a Windows robocopy or another write operation. After 'zfs set sync=disabled' the write performance easily goes to 40 MB/s and as high as 80 MB/s. That is roughly 10x-20x faster and more in line with what you would expect, BUT the writes are not as safe.

So I use sync=disabled 'temporarily' when I want to do a batch of clones or a significant robocopy job, and then reset to sync=always for 'standard' VM operation.
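The disable-then-restore routine can be wrapped so the restore happens even if the copy job fails. A minimal sketch using the standard "zfs get" and "zfs set" commands; the dataset name "tank/vmstore" is a hypothetical placeholder, it restores whatever value was set before instead of assuming 'always', and the command runner is injectable so the logic can be exercised without a real pool.

```python
import subprocess
from contextlib import contextmanager
from typing import Callable, Iterator, Sequence

Runner = Callable[[Sequence[str]], str]


def run(cmd: Sequence[str]) -> str:
    """Run a command and return its stdout, raising if it fails."""
    return subprocess.run(cmd, check=True, capture_output=True, text=True).stdout


@contextmanager
def sync_disabled(dataset: str, runner: Runner = run) -> Iterator[None]:
    """Temporarily set sync=disabled on a ZFS dataset or zvol, then restore the old value."""
    # -H: no header line; -o value: print only the property value.
    previous = runner(["zfs", "get", "-H", "-o", "value", "sync", dataset]).strip()
    runner(["zfs", "set", "sync=disabled", dataset])
    try:
        yield
    finally:
        # Runs even if the bulk copy inside the with-block raises.
        runner(["zfs", "set", f"sync={previous}", dataset])


# Usage (hypothetical dataset name):
# with sync_disabled("tank/vmstore"):
#     ...  # clone VMs / run robocopy here
```

If the property was inherited rather than set locally, restoring it with zfs set turns it into a local setting; zfs inherit sync DATASET would return it to inheriting instead.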

FreeNAS can run a 'scrub' that reads every allocated block and verifies its checksum. It takes about 8 hours for 12 TB, and I run it once a week as a follow-up to make sure that the data written during sync=disabled is OK.
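The quoted figure (about 8 hours for 12 TB) implies an average scrub rate of roughly 417 MB/s. Scrub time depends on the amount of allocated data and on the pool layout, so extrapolating to other pool sizes at that rate is only a rough estimate; the helper names are hypothetical.

```python
def scrub_rate_mb_s(data_tb: float, hours: float) -> float:
    """Average scrub throughput in MB/s (decimal units)."""
    return data_tb * 1e6 / (hours * 3600)


def scrub_hours(data_tb: float, rate_mb_s: float) -> float:
    """Estimated scrub duration in hours for data_tb terabytes at rate_mb_s."""
    return data_tb * 1e6 / rate_mb_s / 3600


rate = scrub_rate_mb_s(12, 8)            # figures quoted above
print(f"{rate:.0f} MB/s")                # 417 MB/s
print(f"{scrub_hours(20, rate):.1f} h")  # hypothetical 20 TB of data: 13.3 h
```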